Introduction

Over 100 million people visit Quora every month, so it’s no surprise that many people ask similarly worded questions. Multiple questions with the same intent can cause seekers to spend more time finding the best answer to their question, and make writers feel they need to answer multiple versions of the same question. Quora values canonical questions because they provide a better experience to active seekers and writers, and offer more value to both of these groups in the long term.

Credits: Kaggle

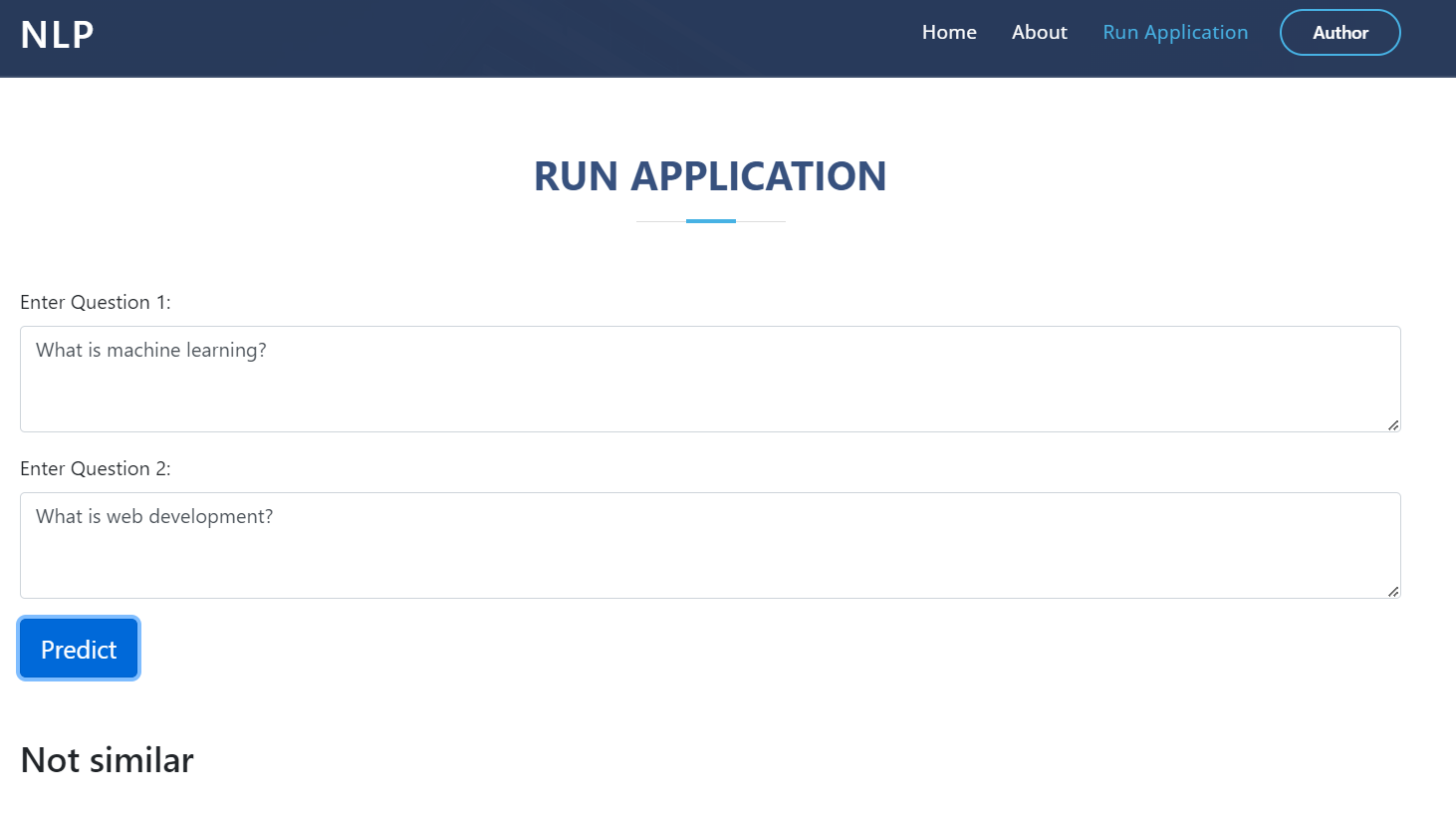

Give it a try here. It might take some time initially to wake up as it is in running on a free tier.

General Information

Identify which questions asked on Quora are duplicates of the questions that have already been asked. This could be useful to instantly provide answers. We are tasked with predicting whether a pair of questions are duplicates or not.

Constraints

- The cost of a mis-classification can be very high. If a non duplicate question pair is predicted as duplicate, then it is going impact the user experience.

- No strict latency concerns.

- Interpretability is partially important.

Metrics

- Log loss

- Confusion Matrix

Data Overview

Data has 6 columns and a total of 4,04,287 entries.

id- Idqid1- Id corresponding to question1qid2- Id corresponding to question2question1- Question 1question2- Question 2is_duplicate- Determines whether a pair is duplicate or not

Data Analysis

Target label distribution

We can observe that data is imbalanced. There are around 64% non duplicate question pairs and 36% duplicate.

We can observe that data is imbalanced. There are around 64% non duplicate question pairs and 36% duplicate.Number of unique and repeated questions

It is as expected that number of questions that are repeated are comparatively lesser than unique questions.

It is as expected that number of questions that are repeated are comparatively lesser than unique questions.Number of occurrences of each question

There is a question that occurs 157 times, as expected most of the questions occurs very less number of times.

There is a question that occurs 157 times, as expected most of the questions occurs very less number of times.Wordcloud for duplicate questions

Wordcloud for non duplicate questions

Preprocessing

- Convert to lower case

- Strip HTML tags

- Remove URLs

- Remove empty lines and extra spaces

- Remove accented characters like ë, õ

- Expand contractions like he’d, she’d

- Remove characters other than alphabets and digits

- Stopword removal (got better results without removing)

- Stemming (got better results without stemming) or Lemmatize (did not try as it is slow)

Feature Extraction

Basic Features

q1_len= length of q1q2_len= length of q2diff_len= absolute difference of length of q1 and q2avg_len= (length of q1 + length of q2)/2freq_qid1= Frequency of qid1’sfreq_qid2= Frequency of qid2’sfreq_q1+freq_q2= sum total of frequency of qid1 and qid2freq_q1-freq_q2= absolute difference of frequency of qid1 and qid2q1_n_words= number of words in q1q2_n_words= number of words in q2diff_word= absolute difference of number of words in q1 and q2avg_words= (number of words in q1 + number of words in q2)/q2first_same= is the first word of both questions samelast_same= is the last word of both questions sameavg_words= (number of words in q1 + number of words in q2)/q2word_common= number of common unique words in q1 and q2word_total= total number of words in q1 + total number of words in q2word_share= word_common/word_total

Advanced Features

cnsc_min= (common non stop words count)/min(length of q1 non stopwords, length q2 non stopwords)cnsc_max= (common non stop words count)/max(length of q1 non stopwords, length q2 non stopwords)csc_min= (common stop words count) / min(length q1 stopwords, length q2 stopwords)csc_max= (common stop words count) / min(length q1 stopwords, length q2 stopwords)ctc_min= (common tokens count) / min(length q1 tokens, length q2 tokens)ctc_min= (common tokens count) / max(length q1 tokens, length q2 tokens)fuzz_qratio- refer blogfuzz_partial_ratio- refer blogtoken_set_ratio- refer blogtoken_sort_ratio- refer bloglongest_substr_ratio= len(longest common substring) / min(length of q1, length of q2)

Distance Features

Obtained word2vec embeddings from spacy library and computed following features

cosine_distancecityblock_distancejaccard_distancecanberra_distanceeuclidean_distanceminkowski_distancebraycurtis_distanceskew_q1vecskew_q2veckur_q1veckur_q2vec

Visualizations of some new features

word_share

We can check from below that it is overlapping a bit, but it is giving some classifiable score for dissimilar questions.

word_common

It is almost overlapping.

token_sort_ratio

Modelling

- Random model gives a log loss of 0.887699

- As log loss is dependent of the value rather than ordering, we can use 0.36 as prediction and it gives a log loss of ~0.69

- Xgboost gave the best results with a log loss of 0.34

Outputs

Competition

- To score better in the quora competition, we can use graph based features i.e. treating every question as a node and find all its neighbors and store it in a adjacent list. Combining both train and test for this purpose will yield much better results. In practice we should not be touching the test set as the main purpose of the model is to see the train data and generalize on unknown data.

- Check out my notebook which uses graph features, glove.840b.300d embeddings with LSTM to result in log loss of 0.189